Propaganda Fact-Checking Tool

Check a News Claim

Analysis Results

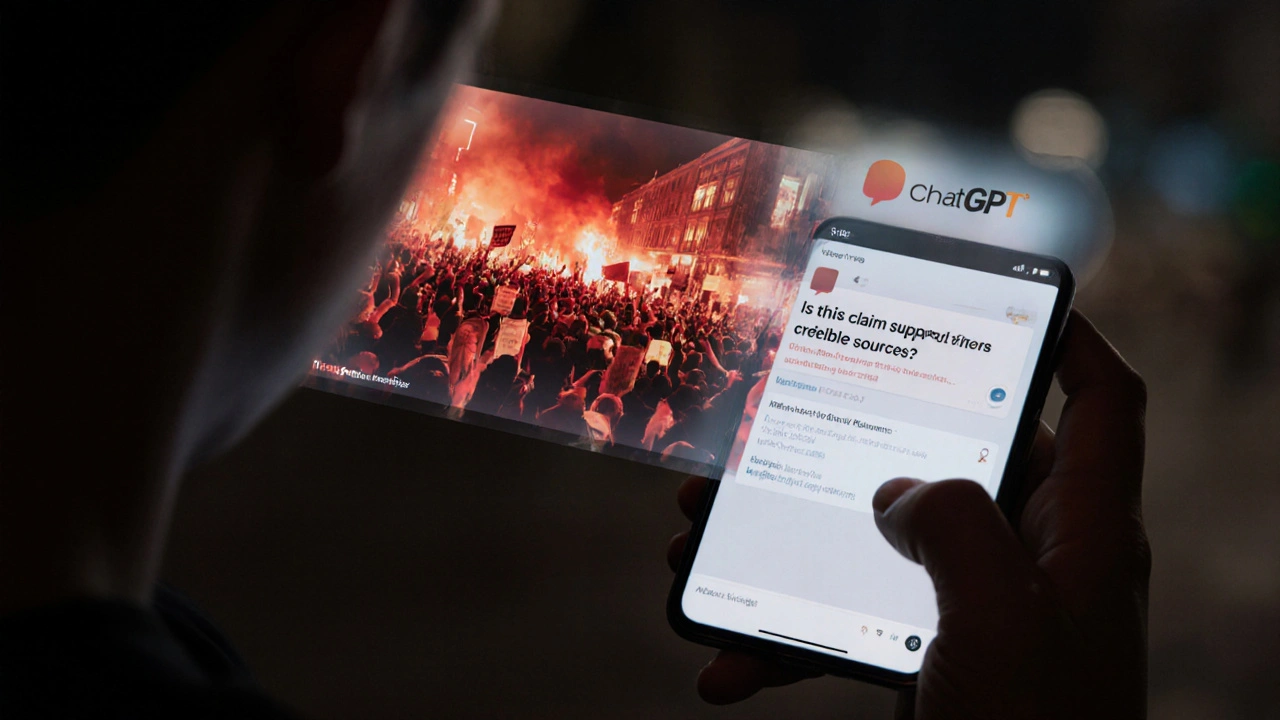

You open your phone. A viral video shows a protest turning violent. The caption screams: "This is what the government is hiding!" You feel angry. You want to share it. But something stops you. Was this really captured live? Or was it edited, taken out of context, or even made up? This is propaganda - and it’s not just on TV anymore. It’s in your feed, your inbox, your group chats. And it’s getting smarter.

Back in the 1940s, propaganda meant posters, radio speeches, and state-controlled newspapers. Today, it’s algorithms serving you emotionally charged clips that match your fears. It’s AI-generated voices pretending to be politicians. It’s deepfakes of celebrities endorsing products you’ve never heard of. The tools to create it are now free. And the people behind it? They don’t need a government budget. Just a laptop and a few hours.

So how do you fight back? You don’t need a journalism degree. You don’t need to become a tech expert. You just need to ask better questions. And one of the most powerful tools you already have? ChatGPT.

What Propaganda Really Looks Like Today

Propaganda isn’t always obvious lies. Often, it’s half-truths wrapped in urgency. A post says: "78% of people in this city lost their jobs after the new law passed." Sounds alarming. But where’s the data? Who counted? When? What about the other 22%? Without context, it’s not news - it’s manipulation.

Real propaganda has three clear traits:

- It targets emotion, not logic. Fear, anger, or outrage are its favorite triggers.

- It simplifies complex issues into good vs. evil. No gray areas. No nuance.

- It demands action - share, sign, donate, hate - before you think.

Think about the last time you saw a post saying: "They don’t want you to know this!" That’s not a clue. That’s a red flag. Real journalism doesn’t hide facts. It explains them.

How ChatGPT Can Help You Uncover the Truth

ChatGPT doesn’t magically know if something is true. But it can help you check things fast - if you know how to ask.

Here’s how to use it like a fact-checker:

- Copy the claim - the headline, the quote, the statistic.

- Paste it into ChatGPT and ask: "Is this claim supported by credible sources? Can you find any reports from reputable outlets like BBC, Reuters, or AP about this?"

- Ask for sources - not just "yes" or "no." Push for names: "Which organizations have reported this?"

- Check the dates - ask: "When was this information first published? Has it been updated?"

- Compare versions - "Can you show me how this claim differs across different countries’ media?"
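If you fact-check often, it can help to keep these questions as a reusable checklist. The Python sketch below is one way to do that, under stated assumptions. `build_fact_check_prompts` is a hypothetical helper. It only assembles text for you to paste into a chat, one question at a time, and it does not call ChatGPT or any API. The question wording comes from the steps above, plus the balanced "What supports this? What contradicts it?" follow-up recommended under What Not to Do.

```python
# Hypothetical helper: builds the fact-checking questions from this article.
# It does not contact ChatGPT or any other service; it only returns text.


def build_fact_check_prompts(claim: str) -> list[str]:
    """Return the questions to paste into a chat, in order, for one claim."""
    claim = claim.strip()
    if not claim:
        raise ValueError("claim must not be empty")
    quoted = f'"{claim}"'
    return [
        # Ask whether credible outlets back the claim up.
        f"Is this claim supported by credible sources? {quoted} "
        "Can you find any reports from reputable outlets like BBC, Reuters, or AP about this?",
        # Push for names, not a yes/no.
        "Which organizations have reported this?",
        # Check the dates.
        "When was this information first published? Has it been updated?",
        # Compare how the claim appears in different countries' media.
        "Can you show me how this claim differs across different countries' media?",
        # Balanced follow-up, so the answer is not steered toward what you already believe.
        "What evidence supports this claim? What contradicts it?",
    ]


if __name__ == "__main__":
    claim = "The EU banned all plastic bottles in 2025."
    for number, prompt in enumerate(build_fact_check_prompts(claim), start=1):
        print(f"{number}. {prompt}")
```

Asking the questions one at a time, rather than all at once, makes it easier to notice when an answer names no sources or skips the question about dates.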

Try this real example: A TikTok video claims, "The EU banned all plastic bottles in 2025." You paste it into ChatGPT and ask: "Is it true that the EU banned all plastic bottles in 2025?"

ChatGPT responds: "No. The EU banned single-use plastic items like cutlery, plates, and straws in 2021 under the Single-Use Plastics Directive. Plastic drink bottles are still allowed, but PET bottles must contain at least 25% recycled plastic from 2025, and all plastic drink bottles at least 30% from 2030. No full ban exists."

That’s not magic. That’s basic research - done in seconds.

Spotting AI-Generated Propaganda

Now, here’s the twist: AI is being used to create propaganda - not just detect it.

AI tools can now write fake news articles that sound like real journalism. They can clone voices to make someone say something they never said. They can generate photos of events that never happened - like a fire at a hospital or a politician kissing an enemy leader.

How do you catch these?

Ask ChatGPT: "Does this image look like it was generated by AI?" Then upload the image or describe it in detail (it may not be able to open a link). It won’t always be right, but it can point out weird details: extra or fused fingers, shadows that don’t match the light source, text that’s gibberish.

Try this: A YouTube video shows a CEO saying, "I’m glad the workers lost their jobs - it was necessary." You play it again. The voice sounds off. The lips don’t quite move right. You paste the transcript into ChatGPT and ask: "Does this quote match the typical speaking style of this CEO?"

ChatGPT compares the quote with the interviews, speeches, and public statements it has information about. It might say: "This quote doesn’t fit how this CEO usually talks about layoffs. In their public statements, they tend to focus on 'transition support' and 'retraining.' The wording is inconsistent with their usual communication pattern, so treat the clip with suspicion and look for the original recording."

That’s not proof. It’s pattern recognition, and it gives you a concrete reason to find the full, original footage before you believe or share the clip.

Why You Can’t Trust Just One Source

One of the biggest mistakes people make? They check one source and call it done. You don’t check one news site. You check three - and from different countries.

Ask ChatGPT: "How did media in the UK, US, and Germany report on the same event?" It will show you differences in tone, emphasis, and framing.

For example, after a major protest in Brazil in 2024, UK media focused on police response. US media highlighted economic demands. German media covered the role of youth organizations. None were wrong. But each left out parts of the story.

Propaganda thrives when you only see one version. ChatGPT helps you see the full picture - fast.

What Not to Do

ChatGPT isn’t perfect. It can hallucinate. It can give you a confident answer that’s wrong. So here’s what to avoid:

- Don’t treat it as a truth machine. Treat it as a starting point.

- Don’t use it to prove your bias. If you think something is fake, don’t just ask, "Is this fake?" Ask, "What evidence supports this? What contradicts it?"

- Don’t ignore primary sources. If ChatGPT cites a report, go find the original - even if it’s a government website or academic paper.

Also, don’t forget: AI doesn’t have values. It doesn’t care if you’re misled. It just gives you what’s statistically likely. You still have to think.

Real-World Example: The School Lunch Controversy

In early 2025, a viral post claimed: "UK schools are now serving only vegan meals - parents are furious." The post had photos of kids eating tofu stir-fry. Comments exploded: "This is cultural erasure!" "They’re destroying our traditions!"

You paste the claim into ChatGPT and ask: "Are UK schools now required to serve only vegan meals?"

ChatGPT replies: "No. In 2024, the UK government updated school meal guidelines to include more plant-based options and reduce meat for environmental reasons. Schools must still offer at least one meat or dairy option per day. No law bans meat. The claim is misleading and misrepresents policy."

You search for the original Department for Education document. It’s there. Public. Free. The post? Made by a food influencer with a vegan supplement brand.

That’s propaganda. And it cost nothing to expose.

Building Your Own Propaganda Defense System

You don’t need to fact-check everything. But you should build habits:

- Pause before sharing. Ask: "Why am I sharing this? To inform? Or to feel right?"

- Keep a short list of trusted sources you check regularly, such as BBC, Reuters, AP, or a local newspaper.

- Use ChatGPT to compare headlines. Type: "Show me how [topic] is reported by [Source A] vs [Source B]."

- When you see emotional language - "they’re lying," "you’ve been fooled" - assume it’s propaganda until proven otherwise.

- Teach someone else. One person who learns this can stop a chain of shares.
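The red flags above can also be turned into a rough checklist. The Python sketch below scans a post for a few phrases taken from this article’s examples and groups them by the traits described earlier: hidden-truth framing, outrage, and demands for instant action. It is a hypothetical heuristic, not a propaganda detector. The phrase list is an illustrative assumption, a match only means "slow down and check," and a clean result proves nothing.

```python
import re

# Illustrative phrase list (an assumption, built from this article's examples).
# ['’] accepts both straight and curly apostrophes.
RED_FLAG_PATTERNS: dict[str, list[str]] = {
    "hidden-truth framing": [
        r"they don['’]t want you to know",
        r"what the government is hiding",
    ],
    "outrage or accusation": [
        r"they['’]re lying",
        r"you['’]ve been fooled",
    ],
    "demand for instant action": [
        r"\bshare (?:this|now)\b",
        r"\bsign now\b",
        r"\bdonate now\b",
    ],
}


def find_red_flags(text: str) -> dict[str, list[str]]:
    """Return each red-flag category with the phrases matched in text.

    A hit means "pause and verify", not "this is false".
    """
    found: dict[str, list[str]] = {}
    for category, patterns in RED_FLAG_PATTERNS.items():
        hits: list[str] = []
        for pattern in patterns:
            # Case-insensitive, so "They're lying" and "THEY'RE LYING" both match.
            hits.extend(m.group(0) for m in re.finditer(pattern, text, re.IGNORECASE))
        if hits:
            found[category] = hits
    return found


if __name__ == "__main__":
    post = "This is what the government is hiding! They’re lying. Share this now!"
    for category, phrases in find_red_flags(post).items():
        print(f"{category}: {phrases}")
```

A keyword list like this is easy to fool, which is why it only tells you what to check. The checking itself still means going to the sources.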

Propaganda doesn’t win because it’s clever. It wins because people are tired, rushed, and overwhelmed. You don’t need to be faster. You just need to be more careful.

Final Thought: You’re Not Just a Consumer

Every time you share something without checking, you become part of the machine that spreads lies. Every time you pause, ask, and verify - you weaken it.

ChatGPT won’t save you. But it can give you the time you need to think. And in a world full of noise, that’s the most powerful tool you have.

Can ChatGPT detect deepfakes?

ChatGPT can’t directly analyze video or audio files, but it can help you spot signs of deepfakes by asking questions like: "Does this quote match the person’s usual speech patterns?" or "Are there inconsistencies in lighting, lip movement, or background details?" It can also point you to dedicated detection tools that analyze media files directly, such as Intel’s FakeCatcher or Microsoft Video Authenticator (the latter was released only to selected organizations, not the general public).

Is it safe to use ChatGPT for fact-checking?

Yes - but only if you treat it as a helper, not a final authority. Always cross-check its answers with trusted sources like BBC, Reuters, or official government websites. ChatGPT can make mistakes, especially with recent events. It’s best used to generate questions and point you toward reliable sources, not to give you definitive answers.

What if ChatGPT gives me conflicting answers?

That’s a useful signal, not just a failure. It often means the topic is complex or poorly documented, though it can also mean the model is guessing. Instead of picking one answer, ask: "What are the most common sources of disagreement on this topic?" Then look for consensus among independent experts. Conflicting answers from AI often reflect real-world uncertainty - and that’s exactly where you need to dig deeper.

Can I use ChatGPT to check social media posts?

Absolutely. Copy the text from the post - even if it’s just a headline or a quote - and paste it into ChatGPT. Ask: "Is this claim accurate? What do reliable sources say?" It can help flag exaggerated numbers, false attributions, and outdated information. For images or videos, describe them in detail and ask: "Does this scene match known facts about the location or event?"

Why does propaganda work so well on social media?

Because social media rewards emotion, not accuracy. Posts that make you angry, scared, or outraged get shared more. Propaganda is designed to trigger those reactions. Algorithms don’t care if it’s true - they care if it keeps you scrolling. That’s why the most viral content is often the most misleading. The solution isn’t to quit social media. It’s to slow down and question what you’re about to share.

Next Steps: Start Small, Think Big

Don’t try to fact-check everything. Pick one post this week that made you angry or surprised. Use ChatGPT to check it. Just once. See what happens.

That’s how change starts - not with grand declarations, but with quiet, skeptical moments. One question. One pause. One truth checked.

I work as a marketing specialist with an emphasis on the digital sphere. I'm passionate about strategizing and executing online marketing campaigns to drive customer engagement and increase sales. In my free time, I maintain a blog about online marketing, imparting insights on trends and tips. I'm dedicated to life-long learning and look forward to growing in my field.